AI Agents Are Becoming the Next Computing Platform: Signals Across AWS, Azure, and Google (Through the Lens of re:Invent 2025)

AI Agent Architecture Series — Part 1

I spent the week at AWS re:Invent 2025, not just in keynotes but in dozens of deep conversations with AWS engineers, enterprise architects, platform teams, and founders. Nearly every discussion circled back to the same questions:

How do we move from LLM copilots to real agentic systems?

What’s blocking teams today?

What does an “AI-native” architecture actually look like in the real world?

Across these conversations, a diverse set of real-world patterns emerged: teams experimenting with early agents, teams struggling with debugging and visibility, teams exploring hybrid GPU strategies, and teams trying to understand how to operate and govern AI systems at scale.

This is also the world I operate in every day.

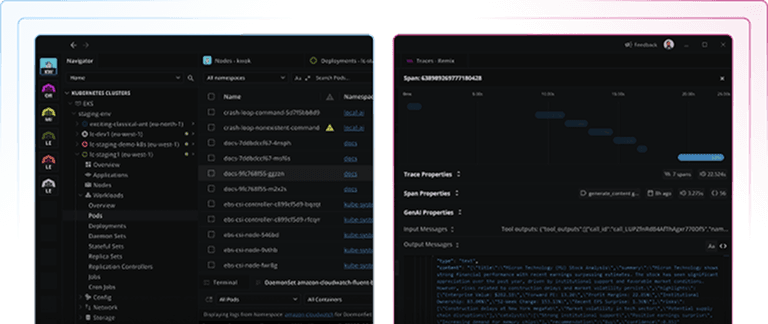

I am the founder behind Lens K8S IDE, Prism AI, and Lens Loop, products used by more than one million developers worldwide who work with Kubernetes, AI/LLM systems, and OpenTelemetry. That gives me a front-row seat to how these technologies are converging in real time.

Between what I saw on the ground at re:Invent and what we observe across our global user base, a consistent story has emerged:

The industry is converging on a new compute architecture:

AI agents at the top, foundation models in the middle, Kubernetes underneath, and OpenTelemetry connecting the entire system.

This article isn’t a recap of AWS announcements. It’s a synthesis of where the tectonic plates of cloud, AI, and enterprise architecture are shifting, across AWS, Azure, and Google Cloud, and what this means for engineering leaders preparing for the agentic era.

Agents Are Becoming First-Class Operational Entities

Across all major clouds, the same architectural primitives are emerging.

While implementations and maturity differ, the underlying direction is strikingly consistent.

AWS: AgentCore Policies, Evaluators & CloudWatch GenAI Observability

AWS introduced a tightly integrated set of capabilities:

- AgentCore Policies: Natural-language policies compiled into Cedar, providing deterministic control over what agents can and cannot do

- AgentCore Evaluators: Continuous evaluation of correctness, helpfulness, safety, and regression

- CloudWatch Generative AI Observability: End-to-end visibility into agent workflows, including:

  - Prompt flows and reasoning steps

  - Tool calls

  - Token usage and latency

  - Errors, throttling, and cost attribution

Together, these capabilities treat agents not as experimental add-ons but as production-grade software systems.
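What makes a policy layer "deterministic" is its decision rule. Cedar's core authorization semantics are default deny, with any matching `forbid` overriding any matching `permit`. The sketch below mimics that decision logic in plain Python for agent tool calls. It is not Cedar syntax or the AgentCore Policies API, and the `ToolCall`/`Policy` types, agent names, tool names, and refund limit are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Literal

Effect = Literal["permit", "forbid"]


@dataclass(frozen=True)
class ToolCall:
    """Hypothetical request: which agent wants to call which tool, with what arguments."""
    agent: str
    tool: str
    args: dict = field(default_factory=dict)


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy: an effect plus a predicate deciding whether it applies."""
    policy_id: str
    effect: Effect
    applies: Callable[[ToolCall], bool]


def authorize(call: ToolCall, policies: list[Policy]) -> tuple[bool, list[str]]:
    """Cedar-like decision: default deny, and any matching forbid beats any permit.

    Also returns the ids of the policies that determined the outcome,
    which is the information an audit log needs.
    """
    matching = [p for p in policies if p.applies(call)]
    forbids = [p.policy_id for p in matching if p.effect == "forbid"]
    if forbids:
        return False, forbids
    permits = [p.policy_id for p in matching if p.effect == "permit"]
    if permits:
        return True, permits
    return False, []  # nothing matched: deny by default


# Hypothetical policies for a customer-support agent.
POLICIES = [
    Policy("allow-refunds", "permit",
           lambda c: c.agent == "support-agent" and c.tool == "issue_refund"),
    Policy("cap-refunds", "forbid",
           lambda c: c.tool == "issue_refund" and c.args.get("amount", 0) > 500),
]

if __name__ == "__main__":
    print(authorize(ToolCall("support-agent", "issue_refund", {"amount": 120}), POLICIES))
    print(authorize(ToolCall("support-agent", "issue_refund", {"amount": 900}), POLICIES))
    print(authorize(ToolCall("support-agent", "delete_account"), POLICIES))
```

The second call is denied even though `allow-refunds` matches, because `cap-refunds` also matches. The third is denied because no policy mentions `delete_account` at all. The model never gets a vote; the outcome depends only on the request and the policies.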

Azure: Evaluation, Governance & Telemetry via Azure AI Foundry

Azure mirrors the same primitives through different building blocks:

- Azure AI Foundry Observability: OpenTelemetry-based traces, metrics, and logs exported to Azure Monitor / Application Insights

- Azure AI Foundry Evaluations and the Azure AI Evaluation SDK: Structured evaluation workflows for models and agents, before and after deployment

The naming differs, but the intent is the same: measurable, governable AI systems.
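Whichever cloud runs the evaluators, a pre- or post-deployment evaluation typically ends in the same decision: score a candidate on a fixed dataset, compare it with the current baseline, and block promotion on regression. A minimal, provider-neutral sketch of that gate follows. The metric names, scores, and 0.05 tolerance are hypothetical, and the per-case scores are assumed to come from whatever evaluator you use.

```python
from statistics import mean

# Hypothetical per-case scores in [0, 1], keyed by metric name.
Scores = dict[str, list[float]]


def regression_report(baseline: Scores, candidate: Scores,
                      tolerance: float = 0.05) -> dict[str, float]:
    """Return the metrics whose mean score dropped by more than `tolerance`.

    Each value is the (negative) change in mean score. The candidate is
    assumed to report every metric the baseline has.
    """
    regressions = {}
    for metric, base_scores in baseline.items():
        delta = mean(candidate[metric]) - mean(base_scores)
        if delta < -tolerance:
            regressions[metric] = round(delta, 3)
    return regressions


def promotion_gate(baseline: Scores, candidate: Scores, tolerance: float = 0.05) -> bool:
    """Allow promotion only if no metric regressed beyond the tolerance."""
    return not regression_report(baseline, candidate, tolerance)


if __name__ == "__main__":
    baseline = {"correctness": [0.9, 0.8, 1.0], "safety": [1.0, 1.0, 1.0]}
    candidate = {"correctness": [0.9, 0.9, 1.0], "safety": [1.0, 0.7, 0.9]}
    print(regression_report(baseline, candidate))
    print(promotion_gate(baseline, candidate))
```

Here the candidate improves correctness but loses about 0.13 in mean safety, so the gate blocks it. Running the same comparison on a schedule against production traffic is one simple way to catch behavioral drift after a model version changes.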

Google Cloud: Vertex AI Agent Builder & Gen AI Evaluation

Google’s stack follows the same pattern:

- Vertex AI Agent Builder: A platform for building and operating Gemini-powered agents with tools, state, and workflows

- Vertex AI Agent Engine: A managed runtime for deploying agents, with dashboards for agent traces, token usage, latency, and errors

- Vertex Gen AI Evaluation: Evaluation of model and agent behavior, including multi-step reasoning trajectories
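Trajectory evaluation scores the sequence of tool calls an agent made, not just its final answer. The stdlib sketch below implements simple versions of common trajectory metrics (exact match, in-order match, precision, recall), similar in spirit to the trajectory metrics Vertex AI documents. It is not that service's API, and the tool names are hypothetical.

```python
def exact_match(predicted: list[str], reference: list[str]) -> bool:
    """Same tool calls, in the same order, with nothing extra."""
    return predicted == reference


def in_order_match(predicted: list[str], reference: list[str]) -> bool:
    """Every reference call appears in order; extra calls in between are allowed."""
    remaining = iter(predicted)
    # `step in remaining` consumes the iterator up to the match,
    # so later reference steps must appear after earlier ones.
    return all(step in remaining for step in reference)


def precision(predicted: list[str], reference: list[str]) -> float:
    """Share of the agent's calls that belong in the reference trajectory."""
    if not predicted:
        return 0.0
    ref = set(reference)
    return sum(step in ref for step in predicted) / len(predicted)


def recall(predicted: list[str], reference: list[str]) -> float:
    """Share of the reference calls the agent actually made (1.0 if none expected)."""
    if not reference:
        return 1.0
    pred = set(predicted)
    return sum(step in pred for step in reference) / len(reference)


if __name__ == "__main__":
    reference = ["search_orders", "get_order", "issue_refund"]
    predicted = ["search_orders", "get_customer", "get_order", "issue_refund"]
    print(exact_match(predicted, reference), in_order_match(predicted, reference))
    print(precision(predicted, reference), recall(predicted, reference))
```

In this example the agent reached the right outcome with one unnecessary lookup: exact match fails, in-order match passes, precision is 0.75, and recall is 1.0. Outcome-only scoring would hide that extra step.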

The Macro Shift

Across AWS, Azure, and Google Cloud:

Agents are being promoted from “assistant features” to “operational entities” — with policies, evaluation, observability, and lifecycle management.

This marks the emergence of a new execution layer in cloud computing.

Frontier Agents Point to the Era of “Software Co-Workers”

AWS also introduced Frontier Agents, a preview family of long-running autonomous agents:

- Kiro — an autonomous development agent

- AWS Security Agent — context-aware security workflows

- AWS DevOps Agent — CI/CD guardrails, telemetry correlation, and root-cause analysis

These systems are:

- Long-lived

- Stateful

- Tool-using

- Integrated into real developer and operations workflows

- Capable of operating for hours or days

Azure AI Foundry Agents and Gemini-based agents in Vertex AI Agent Builder follow the same trajectory.

The shift is unmistakable: agents are moving from interfaces to infrastructure.
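An agent that runs for hours or days has to survive restarts, which in practice means persisting its state after each step and resuming from the last checkpoint. The following is a minimal sketch of that pattern, assuming a local JSON file as the store; a production system would more likely use a database or a durable workflow engine. The step names and file path are hypothetical.

```python
import json
import os
import tempfile
from pathlib import Path
from typing import Optional


def load_checkpoint(path: Path) -> dict:
    """Resume from the last checkpoint, or start with empty state."""
    if path.exists():
        return json.loads(path.read_text())
    return {"completed": [], "results": {}}


def save_checkpoint(path: Path, state: dict) -> None:
    """Write state atomically: dump to a temp file, then rename it over the old checkpoint."""
    fd, tmp = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)


def run_agent(steps: list[str], path: Path, fail_at: Optional[str] = None) -> dict:
    """Run each step at most once, checkpointing after every completed step.

    `fail_at` simulates a crash so a rerun can show that finished work is skipped.
    """
    state = load_checkpoint(path)
    for step in steps:
        if step in state["completed"]:
            continue  # finished before the restart; do not redo it
        if step == fail_at:
            raise RuntimeError(f"simulated crash during {step}")
        state["results"][step] = f"output of {step}"  # stand-in for real model/tool work
        state["completed"].append(step)
        save_checkpoint(path, state)
    return state


if __name__ == "__main__":
    steps = ["triage_alert", "collect_logs", "propose_fix"]
    with tempfile.TemporaryDirectory() as tmpdir:
        ckpt = Path(tmpdir) / "agent-state.json"
        try:
            run_agent(steps, ckpt, fail_at="propose_fix")
        except RuntimeError as err:
            print(err)  # first run dies after two steps
        print(run_agent(steps, ckpt)["completed"])  # second run only does the last step
```

Writing to a temp file and renaming it means a crash mid-write leaves the previous checkpoint intact rather than a half-written file.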

The Model Layer Is Becoming a Commodity Platform

Across all clouds, model ecosystems are expanding, but also standardizing.

AWS Bedrock

- Nova 2 family (Lite / Pro / Sonic / Omni)

- Nova Forge, enabling custom domain-specific “Novella” models

- 18 fully managed open-weight models from multiple vendors

Azure AI Foundry

- Unified access to OpenAI and Azure-first models

- Governance and evaluation integrated by default

Google Vertex AI

- Gemini models

- Model Garden with open-weight options

The important shift is not which model you choose, but what surrounds it:

As models become increasingly interchangeable, differentiation moves to tools, skills, policies, evaluation, observability, and infrastructure.
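One way to keep models interchangeable is to make the agent depend on a narrow interface rather than a vendor SDK, so switching models becomes a configuration change. The following is a minimal sketch using `typing.Protocol`; `ChatModel`, `Completion`, and both model classes are hypothetical stubs standing in for real provider clients, and no real SDK calls are shown.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Completion:
    text: str
    input_tokens: int
    output_tokens: int


class ChatModel(Protocol):
    """The only model surface the agent is allowed to depend on."""
    name: str

    def complete(self, prompt: str) -> Completion: ...


class EchoModel:
    """Hypothetical stub; a real adapter would wrap a provider SDK here."""
    name = "echo-v1"

    def complete(self, prompt: str) -> Completion:
        return Completion(f"echo: {prompt}", len(prompt.split()), 2)


class UpperModel:
    """A second hypothetical stub with different behavior."""
    name = "upper-v1"

    def complete(self, prompt: str) -> Completion:
        words = len(prompt.split())
        return Completion(prompt.upper(), words, words)


# Registry of available models; which one is used comes from configuration.
MODELS: dict[str, ChatModel] = {m.name: m for m in (EchoModel(), UpperModel())}


def answer(question: str, model_name: str) -> str:
    """Agent code selects a model by name; nothing else changes when it is swapped."""
    return MODELS[model_name].complete(question).text


if __name__ == "__main__":
    print(answer("hello agent", "echo-v1"))
    print(answer("hello agent", "upper-v1"))
```

Because tools, policies, and evaluation live outside this interface, they carry over unchanged when the model behind it is replaced.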

Kubernetes Is Quietly Becoming the AI Execution Substrate

The AI narrative gets the attention, but the infrastructure story is just as important.

AWS

- Amazon EKS Hybrid Nodes: Lets on-premises GPU servers join EKS clusters as worker nodes

- AI Factories: Trainium and NVIDIA UltraServers deployed on-prem with AWS managing the control plane

Azure

- AKS as the backbone for hybrid GPU and AI workloads

Google Cloud

- GKE as a core platform for distributed and hybrid ML/AI systems

The shared direction is clear:

AI workloads are increasingly landing on Kubernetes as the universal execution substrate — across cloud, hybrid, and edge environments.

This aligns with what we observe across the Lens ecosystem: Kubernetes has become the natural home for GPU scheduling, isolation, tenancy, and AI workload orchestration.
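On EKS, AKS, and GKE alike, GPU scheduling starts with the same Kubernetes primitive: a container requests the `nvidia.com/gpu` extended resource exposed by the NVIDIA device plugin. The sketch below builds such a Pod manifest in Python and prints it as JSON, which `kubectl apply -f` accepts. The image, the `example.com/gpu-pool` node label, and the pool name are hypothetical placeholders for whatever your cluster actually uses.

```python
import json


def gpu_pod(name: str, image: str, gpus: int, node_pool: str) -> dict:
    """Build a Pod manifest that asks the scheduler for `gpus` NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be >= 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Hypothetical label pinning the pod to a GPU pool (cloud or on-prem hybrid nodes).
            "nodeSelector": {"example.com/gpu-pool": node_pool},
            # GPU nodes are often tainted so that only GPU workloads are scheduled there.
            "tolerations": [{
                "key": "nvidia.com/gpu",
                "operator": "Exists",
                "effect": "NoSchedule",
            }],
            "restartPolicy": "Never",
            "containers": [{
                "name": "inference",
                "image": image,
                # GPUs are requested via limits and cannot be overcommitted or shared by default.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod("llm-inference", "registry.example.com/llm-server:latest", 1, "on-prem-a100")
    print(json.dumps(manifest, indent=2))
```

The same manifest works whether the selected pool is a managed cloud node group or on-premises servers joined to the cluster, which is what makes Kubernetes a practical common substrate.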

The Emerging Agent Stack (Across Clouds)

A common architecture is taking shape:

1. Agents: Long-running autonomous workflows with clear responsibility boundaries.

2. Skills & Tools: APIs, domain logic, runbooks, and knowledge sources.

3. Foundation Models: Nova, GPT, Gemini, and open-weight models — increasingly swappable.

4. Policy & Evaluation Layers

   - AWS: AgentCore Policies + Evaluators

   - Azure: Responsible AI + Foundry Evaluations / Evaluation SDK

   - Google: Vertex Gen AI Evaluation

5. Observability (OpenTelemetry Everywhere)

   - CloudWatch Generative AI Observability

   - Azure AI Foundry Observability

   - Vertex AI Agent Builder monitoring

   - OpenTelemetry Gen-AI semantic conventions

6. Substrate

   - EKS / AKS / GKE

   - Hybrid GPU nodes

   - AI Factories and equivalents
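The OpenTelemetry Gen-AI semantic conventions (still marked as in development) standardize span names and attributes such as `gen_ai.operation.name`, `gen_ai.request.model`, and `gen_ai.usage.input_tokens`. That shared vocabulary is what lets different backends interpret the same agent trace. To stay dependency-free, the sketch below builds plain dictionaries shaped like such spans instead of calling the OpenTelemetry SDK. The agent, model, and tool names are hypothetical, and attribute names should be checked against the spec version you target, since they have changed between releases.

```python
import time
import uuid
from typing import Optional


def make_span(trace_id: str, operation: str, target: str,
              attributes: dict, parent_id: Optional[str] = None) -> dict:
    """Build a span-shaped dict named '{operation} {target}'."""
    return {
        "trace_id": trace_id,               # 32 hex chars (16 bytes), like an OTel trace id
        "span_id": uuid.uuid4().hex[:16],   # 16 hex chars (8 bytes), like an OTel span id
        "parent_id": parent_id,
        "name": f"{operation} {target}",
        "start_ns": time.time_ns(),
        "attributes": {"gen_ai.operation.name": operation, **attributes},
    }


def trace_agent_turn(agent: str, model: str, tool: str,
                     in_tokens: int, out_tokens: int) -> list[dict]:
    """One agent turn: an agent invocation span with a model call and a tool call as children."""
    trace_id = uuid.uuid4().hex
    root = make_span(trace_id, "invoke_agent", agent, {"gen_ai.agent.name": agent})
    chat = make_span(trace_id, "chat", model, {
        "gen_ai.request.model": model,
        "gen_ai.usage.input_tokens": in_tokens,
        "gen_ai.usage.output_tokens": out_tokens,
    }, parent_id=root["span_id"])
    call = make_span(trace_id, "execute_tool", tool, {"gen_ai.tool.name": tool},
                     parent_id=root["span_id"])
    return [root, chat, call]


def total_tokens(spans: list[dict]) -> int:
    """Sum token usage across a trace, e.g. as input to per-agent cost attribution."""
    return sum(
        s["attributes"].get("gen_ai.usage.input_tokens", 0)
        + s["attributes"].get("gen_ai.usage.output_tokens", 0)
        for s in spans
    )


if __name__ == "__main__":
    spans = trace_agent_turn("refund-agent", "example-model", "issue_refund", 812, 64)
    for s in spans:
        print(s["name"], s["attributes"])
    print(total_tokens(spans))  # → 876
```

Parent/child links are what turn isolated model calls into an inspectable reasoning path: the tool call and the model call both hang off the agent invocation that caused them.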

Alongside cloud platforms, emerging open standards such as the Model Context Protocol (MCP) are beginning to shape how agents connect to tools, data sources, and services. MCP fits naturally into Kubernetes-based environments, where agents and the MCP servers that provide their context can be deployed, secured, and scaled as first-class workloads.
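At the wire level, MCP uses JSON-RPC 2.0: a client discovers a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below handles just those two methods in plain Python to show the message shapes. It deliberately omits the `initialize` handshake, the transports (stdio or HTTP), and the validation that an official MCP SDK would provide, and the `get_pod_status` tool with its stubbed data is hypothetical.

```python
import json

# Hypothetical tool backed by stub data: report the phase of a Kubernetes pod.
FAKE_PODS = {"llm-inference": "Running", "batch-embed": "Pending"}

TOOLS = {
    "get_pod_status": {
        "description": "Return the phase of a pod by name.",
        "inputSchema": {
            "type": "object",
            "properties": {"pod": {"type": "string"}},
            "required": ["pod"],
        },
        "handler": lambda args: FAKE_PODS.get(args.get("pod", ""), "NotFound"),
    }
}


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request for the MCP methods tools/list and tools/call."""
    req = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": req.get("id")}
    method, params = req.get("method"), req.get("params", {})
    if method == "tools/list":
        reply["result"] = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call" and params.get("name") in TOOLS:
        text = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    elif method == "tools/call":
        reply["error"] = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
    else:
        reply["error"] = {"code": -32601, "message": f"Method not found: {method}"}
    return json.dumps(reply)


if __name__ == "__main__":
    print(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
    print(handle(json.dumps({
        "jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_pod_status", "arguments": {"pod": "llm-inference"}},
    })))
```

Because the server is just a process speaking this protocol, it can be packaged as a container and given its own Deployment, service account, and network policy like any other workload.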

This is the next evolution of cloud-native architecture.

Why This Matters for Engineering Leaders

We are no longer designing “AI features.” We are designing AI systems that behave like software organisms.

The core question shifts from “What’s the right prompt?” to:

- What does each agent own inside our organization?

- How do we enforce safe, auditable actions?

- How do we observe reasoning paths, not just outcomes?

- How do we detect behavioral drift as models change?

- How do we control cost, latency, and quality over time?

- When, and why, does a multi-agent architecture actually make sense?

These are architectural decisions that will shape engineering organizations for years to come.

What’s Next: Skills First, Not Multi-Agent First

One theme came up repeatedly at re:Invent, and it’s something we’re also seeing across real-world systems today.

Many teams are rushing into multi-agent architectures before they’ve built a single agent with well-defined skills, tools, and operational boundaries. The pattern feels familiar to anyone who lived through the early microservices era.

In future writing, I’ll explore this idea further: why starting with skills and clear ownership matters, where multi-agent designs genuinely add value, and how early architectural choices can either accelerate — or quietly constrain — agentic systems over time.